My journey in Artificial Intelligence began in 2004, rooted in Computer Engineering. Initially, my work focused on products that used Machine Learning for predictive applications. That focus broadened to include Generative AI and has since narrowed to a deeper concentration on agentic systems. Today, my team enables enterprise customers to architect and deploy complete, end-to-end workflow solutions using App Agents at Microsoft.

Power Platform is fundamentally reshaping how product development teams work. For design teams, this demands a critical shift: adopting a Behavioral Design-first mindset when building AI products.

Recently, I reconnected with a designer who used to work on my team. Her questions cut to the core: “What do you mean by that? What are the tangible outcomes you need from designers in 2026?” That conversation sparked this post—hopefully a guide for Product Designers navigating their path forward.

Here’s what you should understand: AI is not taking your job away. It is expanding your perimeter. After reading this, you may realize you can do much more than you’re doing today. But this evolution is up to you.

While our roles are constantly changing, you remain in control. You decide if this new professional identity aligns with your values. Do not let the market alone determine what your day-to-day life should look like.

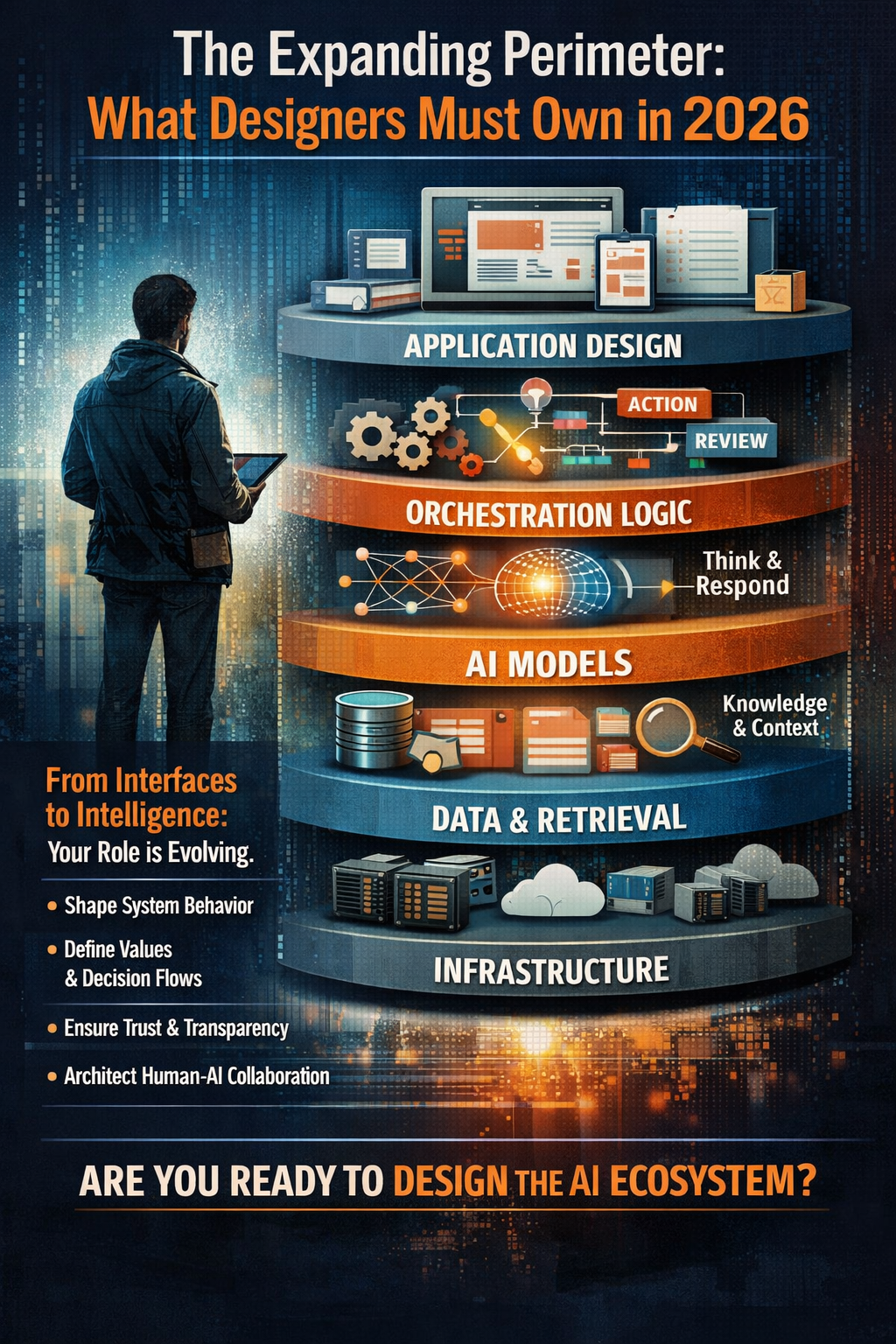

Designing Across the AI Stack: Your New Territory

Let me break down where designers create value across the modern AI stack. These are the layers where your decisions directly shape system behavior and human experience.

1. Infrastructure / Hardware

What it is: Where AI runs—GPUs, deployment models (cloud, on-prem, on-device), and the performance, privacy, and reliability constraints that shape the system.

Role of design: Translate human experience needs (latency, trust, privacy, failure tolerance) into non-negotiable system constraints. Design for degradation when infrastructure fails.

Example: When inference fails, what happens?

- Do we retry silently?

- Do we ask the user?

- Do we fall back to a smaller model?

- Do we switch to read-only mode?

These aren’t engineering questions. These are behavioral design decisions that determine trust and usability.
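To make that concrete, here is a minimal sketch of how the four options above could be written down as an explicit policy rather than left implicit in error-handling code. Every name here (`Fallback`, `FailurePolicy`, `choose_fallback`) is hypothetical, and the ordering of the options is one possible design choice, not a recommendation.

```python
from dataclasses import dataclass
from enum import Enum


class Fallback(Enum):
    RETRY_SILENTLY = "retry_silently"
    ASK_USER = "ask_user"
    SMALLER_MODEL = "smaller_model"
    READ_ONLY = "read_only"


@dataclass(frozen=True)
class FailurePolicy:
    # Hypothetical knobs a designer might own; the defaults are illustrative.
    max_silent_retries: int = 1
    allow_smaller_model: bool = True


def choose_fallback(
    failures: int,
    on_smaller_model: bool,
    user_present: bool,
    policy: FailurePolicy,
) -> Fallback:
    """Pick the next behavior after `failures` failed inference attempts."""
    # Stay quiet only for the first few failures.
    if failures <= policy.max_silent_retries:
        return Fallback.RETRY_SILENTLY
    # Degrade to a smaller model once, if the product allows it.
    if policy.allow_smaller_model and not on_smaller_model:
        return Fallback.SMALLER_MODEL
    # If someone is there to decide, hand the decision to them.
    if user_present:
        return Fallback.ASK_USER
    # Nobody to ask: stop making changes.
    return Fallback.READ_ONLY


print(choose_fallback(2, False, True, FailurePolicy()))
```

The point of the sketch is that each branch is a product decision someone can read, question, and change.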

2. Models

What it is: The underlying intelligence. Foundation or specialized models with different reasoning depth, speed, accuracy, and behavioral tendencies.

Role of design: Define the intended behavioral posture of the system. Should it demonstrate confidence or humility? What’s its risk tolerance? The model you choose must align with how the product should think and respond under pressure.

This is where you establish personality and judgment at the foundational level.

3. Data (RAG, Pipelines, Vector Stores)

What it is: External knowledge that supplements the model through retrieval, grounding outputs in up-to-date or domain-specific information.

Role of design: Shape how knowledge is surfaced, cited, and trusted. Make uncertainty, source quality, and freshness legible to humans rather than silently implied.

Users need to understand what the system knows, how it knows it, and how current that knowledge is. This transparency is a design responsibility.
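One way to picture this is a citation that carries its own freshness and match quality instead of a bare link. The sketch below is illustrative only: the `Source` type, the 180-day staleness window, and the 0.75 relevance cutoff are all assumptions made up for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Source:
    title: str
    last_updated: date
    relevance: float  # hypothetical retrieval score in [0, 1]


def freshness_label(source: Source, today: date, stale_after_days: int = 180) -> str:
    """Turn the age of a source into words a user can act on."""
    age_days = (today - source.last_updated).days
    return "may be outdated" if age_days > stale_after_days else "current"


def render_citation(source: Source, today: date) -> str:
    """Show what the system knows, how current it is, and how well it matched."""
    match = "strong match" if source.relevance >= 0.75 else "weak match"
    updated = source.last_updated.isoformat()
    return f"{source.title} (updated {updated}, {freshness_label(source, today)}, {match})"


policy = Source("Refund policy", date(2025, 1, 10), relevance=0.9)
print(render_citation(policy, today=date(2025, 3, 1)))
```

The thresholds matter less than the fact that they exist in one visible place where a designer can argue about them.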

4. Orchestration

What it is: The logic that breaks problems into steps: planning, tool execution, self-review, and escalation across complex tasks.

Role of design: Design decision flows and autonomy boundaries. When should the system ask, act, slow down, self-correct, or hand control back to a human?

This is where you define the rhythm of human-AI collaboration.
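An autonomy boundary can be sketched as a small decision function. This is a simplified illustration, not how any particular orchestration framework works; the inputs (`confidence`, `reversible`, `high_stakes`) and the 0.8 threshold are assumptions chosen for the example.

```python
from enum import Enum


class Decision(Enum):
    ACT = "act"
    ASK = "ask"
    ESCALATE = "escalate"


def decide(
    confidence: float,
    reversible: bool,
    high_stakes: bool,
    ask_threshold: float = 0.8,
) -> Decision:
    """Decide whether the system acts, asks first, or hands control back."""
    # High-stakes actions that cannot be undone always go to a human.
    if high_stakes and not reversible:
        return Decision.ESCALATE
    # Low confidence, or high stakes even when reversible: ask first.
    if confidence < ask_threshold or high_stakes:
        return Decision.ASK
    # Confident and low-stakes: act.
    return Decision.ACT


print(decide(confidence=0.95, reversible=True, high_stakes=False))
```

Moving `ask_threshold` up or down is exactly the ask-first versus act-first trade-off, expressed as a single number.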

5. Application

What it is: The user-facing layer: interfaces, interaction modes, revisions, citations, and integrations where humans experience the AI’s behavior.

Role of design: Create judgment-centered interfaces that support review, correction, reversibility, and trust. Ensure humans remain oriented, in control, and accountable.

The application layer is where all your architectural decisions become visible and tangible.

Two Critical Layers: Where Behavioral Design Happens

Let me go deeper on two layers where designers have the most direct influence on AI behavior:

1. The Model Layer: Influencing Priors and Normative Stance

At the model level, designers influence the Priors—where core values, behavioral defaults, and posture are encoded.

Encoding Values and Defaults: You embed the fundamental behaviors that define the system’s character:

- Conservative vs. proactive

- Ask-first vs. act-first

- The overall system posture (think Anthropic’s Constitutional AI as a reference point)

Defining Normative Stance: You establish what constitutes “harm” in your product’s context and when uncertainty must be surfaced to the user. This isn’t philosophy—it’s product specification.

2. The Orchestration Layer: Influencing Prompts, Resources, and Tools

Within the orchestration layer (particularly Model Context Protocol, or MCP, servers), designers shape the system’s operational conduct through three key elements:

Prompts (How to Think): Define operational judgment and reasoning posture:

- The system’s role and reasoning structure

- Escalation logic (when to defer to humans, when to invoke tools)

- Tone under duress (handling errors, refusals, and uncertainty)

Resources (What to Know): Define what information the system can access and how it contextualizes that knowledge.

Tools (What Can Be Done): Define the actions the system can take. Critical here: identify agency boundaries and design for reversibility.

Surfacing reversibility—undo, preview, dry-run, explain—is perhaps your most powerful lever for building trust. When users know they can reverse, preview, or understand an action, they engage more confidently with autonomous behaviors.
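As a rough sketch of what reversibility looks like at the tool level, the hypothetical `RecordStore` below gives one action a preview, a dry-run mode, and an undo. It is an in-memory toy for illustration and does not represent any real tool API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class RecordStore:
    records: Dict[str, str] = field(default_factory=dict)
    # Each entry is (key, previous value); None means the key did not exist.
    _undo_log: List[Tuple[str, Optional[str]]] = field(default_factory=list)

    def preview_update(self, key: str, new_value: str) -> str:
        """Explain what would change without changing anything."""
        return f"{key}: {self.records.get(key)!r} -> {new_value!r}"

    def update(self, key: str, new_value: str, dry_run: bool = False) -> str:
        summary = self.preview_update(key, new_value)
        if dry_run:
            return "DRY RUN " + summary
        # Remember the previous value so the change can be reversed.
        self._undo_log.append((key, self.records.get(key)))
        self.records[key] = new_value
        return "APPLIED " + summary

    def undo(self) -> bool:
        """Reverse the most recent applied update; False if there is none."""
        if not self._undo_log:
            return False
        key, previous = self._undo_log.pop()
        if previous is None:
            self.records.pop(key, None)
        else:
            self.records[key] = previous
        return True


store = RecordStore({"status": "draft"})
print(store.update("status", "published", dry_run=True))
```

An agent that is handed `update` with `dry_run=True` by default can show its intent before acting, and the user can always call `undo` afterward.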

What This Means for You

The designer’s role in 2026 is not about making AI interfaces, even though that is still the most prevalent discussion out there. It’s about architecting behavior across the entire stack: from how models think to how systems escalate, from what knowledge gets surfaced to how humans stay in control.

This is behavioral systems design at scale.

Your new perimeter includes:

- Defining system values and behavioral defaults

- Architecting decision flows and autonomy boundaries

- Making AI reasoning transparent and actionable

- Designing for trust, reversibility, and human judgment

- Ensuring accountability remains with humans

The question isn’t whether AI will change your role. It already has. The question is: Will you expand into this new territory, or will you let others define what design means in this AI-heavy world?

The choice, as always, is yours.

Where to Start

If this resonates with you but you’re wondering where to begin:

- Study the stack. Understand the layers beyond the UI. Your design decisions need to be architecturally informed.

- Learn the language. Engage with engineering and ML teams on their terms. Understanding model behavior, retrieval systems, and orchestration logic is now table stakes.

- Design for judgment, not just experience. Every interaction is an opportunity for human judgment. Design interfaces that support thoughtful decision-making, not just efficiency.

- Advocate for transparency. Push for systems that show their work, reveal uncertainty, and make reasoning legible.

- Own behavior, not just pixels. Your responsibility extends from how the system thinks to how it acts under pressure.

The expanding perimeter is real. The opportunity is immense. The future of design leadership depends on those willing to claim this new territory.

Are you ready?