AI products are shipping faster than our ability to reason about their long-term effects on people.

We obsess over performance metrics. We debate hallucinations and alignment. We track adoption curves and time-to-value.

But there’s a quieter risk that rarely shows up in dashboards: AI systems can scale outcomes while slowly degrading human agency, judgment, and adaptability.

This erosion doesn’t come from malicious intent. It comes from over-optimization.

When a product “works better” if humans think less, decide less, and defer more, human capability becomes collateral damage.

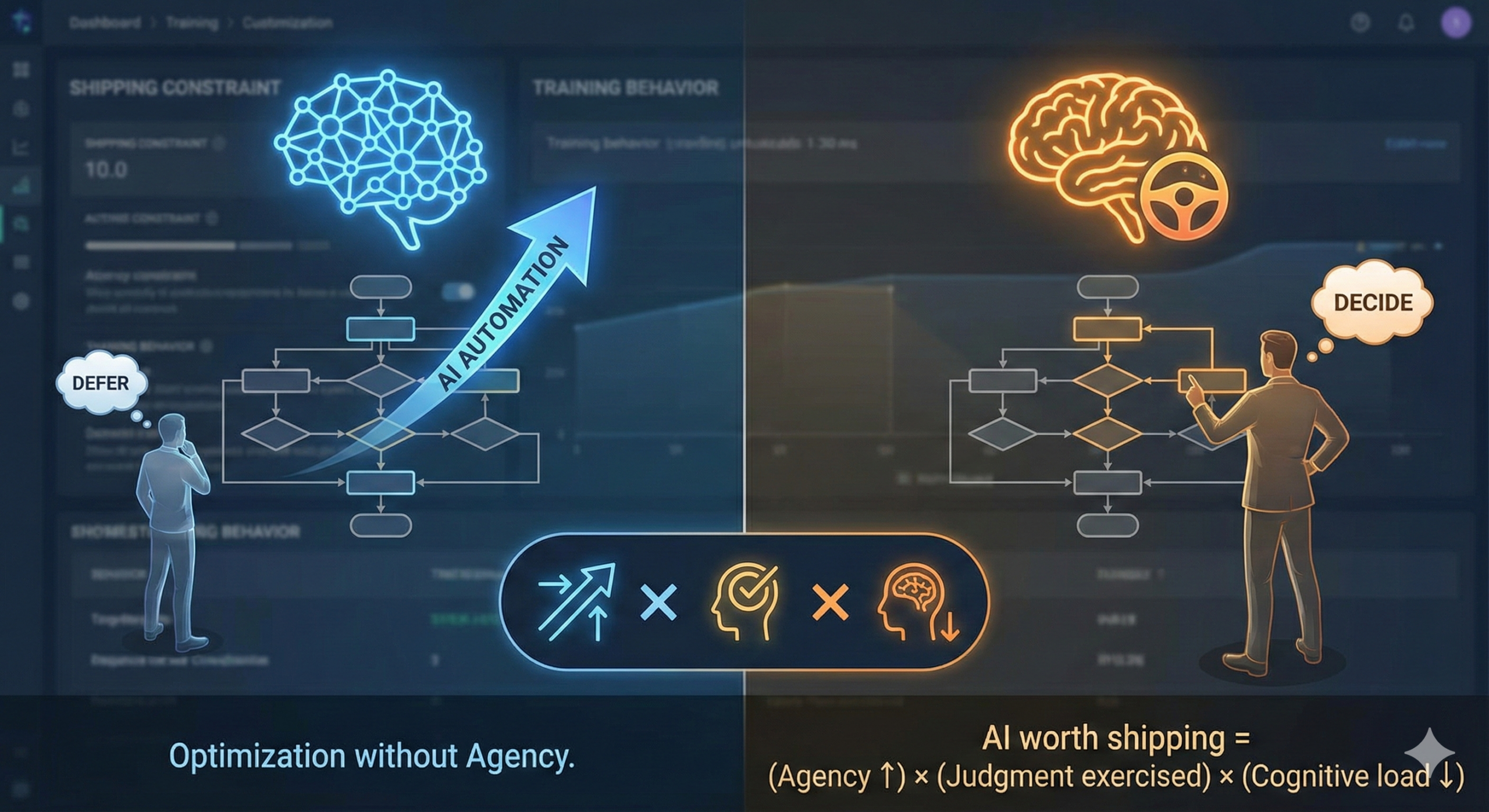

So I’ve been working on a shipping constraint for AI products. Something as explicit as security or privacy reviews. A formula you can actually use.

The Human Preservation Formula

AI worth shipping = (Agency ↑) × (Judgment remains exercised) × (Cognitive clarity ↑)

This should be a leadership constraint.

If any term collapses to zero, the system may still look successful in your metrics, but it’s training humans in the wrong direction.
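The multiplicative structure is easier to see in a small Python sketch. It assumes your own measurement process has already normalized each term to a score between 0 and 1. The names `shipping_score` and `worth_shipping` and the 0.5 threshold are hypothetical choices for illustration. They are not part of the formula.

```python
def shipping_score(agency: float, judgment: float, clarity: float) -> float:
    """Multiply the three terms. Each is assumed to be pre-normalized to [0, 1]."""
    for name, value in (("agency", agency), ("judgment", judgment), ("clarity", clarity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return agency * judgment * clarity


def worth_shipping(agency: float, judgment: float, clarity: float,
                   threshold: float = 0.5) -> bool:
    # Hypothetical release gate. The threshold is an illustrative assumption.
    return shipping_score(agency, judgment, clarity) >= threshold


# Strong agency and clarity cannot compensate for judgment collapsing to zero.
print(shipping_score(0.9, 0.0, 0.95))   # 0.0
print(worth_shipping(0.9, 0.8, 0.9))    # True (score is about 0.648)
```

Multiplying the terms, rather than averaging them, is what enforces the "any term at zero" rule. An average of 0.9, 0.0, and 0.95 would still come out at about 0.62 and look healthy.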

Let me break down each term and show you how to measure it without pretending we can quantify wisdom.

1. Agency ↑

Agency means humans retain the ability to choose, steer, and refuse without penalty.

It’s not about having an “undo” button. Agency exists when humans understand system boundaries, can change direction without friction, and aren’t nudged into compliance by clever defaults.

How agency erodes

It happens when:

- Defaults become invisible mandates

- Reversibility disappears silently

- Opting out carries social, economic, or workflow penalties

You’ve seen this. The AI that’s “helpful” by auto-completing your email, but makes it socially awkward to write your own. The system that “streamlines” decisions by removing alternatives from view.

How to measure agency

Reversibility Ratio (RR)

What percentage of AI actions are fully reversible versus irreversible or costly to undo?

If irreversible actions can happen without explicit human approval, agency is already compromised.
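Here is a minimal sketch of the Reversibility Ratio. It assumes each AI action is logged with two hypothetical boolean fields, `reversible` and `human_approved`. The sketch also flags the red-line condition described above: irreversible actions that ran without explicit human approval.

```python
from dataclasses import dataclass


@dataclass
class AIAction:
    name: str
    reversible: bool       # hypothetical field: can the action be fully undone at low cost?
    human_approved: bool   # hypothetical field: did a person explicitly approve it first?


def reversibility_ratio(actions: list[AIAction]) -> float:
    """Share of AI actions that are fully reversible (0.0 to 1.0)."""
    if not actions:
        return 1.0  # assumption: no actions means nothing irreversible happened
    return sum(a.reversible for a in actions) / len(actions)


def unapproved_irreversible(actions: list[AIAction]) -> list[str]:
    """Names of irreversible actions that ran without explicit human approval."""
    return [a.name for a in actions if not a.reversible and not a.human_approved]


# Illustrative log, not real data.
log = [
    AIAction("draft_reply", reversible=True, human_approved=False),
    AIAction("archive_thread", reversible=True, human_approved=False),
    AIAction("send_payment", reversible=False, human_approved=False),
    AIAction("delete_account", reversible=False, human_approved=True),
]
print(reversibility_ratio(log))       # 0.5
print(unapproved_irreversible(log))   # ['send_payment']
```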

Override Friction Score (OFS)

How much time and how many steps does it take to inspect alternatives, modify recommendations, or decline?

If “accept” is one click and “change” requires navigating six screens, you’ve engineered obedience to AI, not agency.
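One way to turn the Override Friction Score into a number is to compare the steps and seconds needed to change or decline a recommendation with those needed to accept it. The sketch below does that. The ratio form and the `PathCost` fields are assumptions. The text only asks how much time and how many steps each path takes. The timings in the example are made-up illustrative values.

```python
from typing import NamedTuple


class PathCost(NamedTuple):
    steps: int       # clicks or screens needed to complete the path
    seconds: float   # e.g. median time observed in usability testing


def override_friction(accept: PathCost, override: PathCost) -> dict[str, float]:
    """How many times more costly overriding is than accepting (1.0 means parity)."""
    return {
        "step_ratio": override.steps / max(accept.steps, 1),
        "time_ratio": override.seconds / max(accept.seconds, 1e-9),
    }


# The pattern from the text: one click to accept, six screens to change.
print(override_friction(PathCost(steps=1, seconds=2.0), PathCost(steps=6, seconds=45.0)))
# {'step_ratio': 6.0, 'time_ratio': 22.5}
```

A ratio near 1.0 means changing course costs about as much as going along with the AI. A ratio far above 1.0 is the engineered-obedience pattern.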

Autonomy Boundary Clarity (ABC)

In user testing, ask: “What will the AI do automatically?” and “What will it never do without your approval?”

Measure the percentage of users who can answer correctly.

If people can’t articulate those boundaries, they don’t have agency. They just get surprised.
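The sketch below scores Autonomy Boundary Clarity from user-testing answers. It assumes a researcher has already graded each participant's answers to the two questions as correct or incorrect. Counting a participant only when both answers are correct is an assumption about how strict to be.

```python
def boundary_clarity(graded: list[tuple[bool, bool]]) -> float:
    """Percentage of participants who answered both boundary questions correctly.

    Each tuple is (knows_what_ai_does_automatically, knows_what_needs_approval).
    """
    if not graded:
        raise ValueError("no participants")
    both_correct = sum(1 for auto_ok, approval_ok in graded if auto_ok and approval_ok)
    return 100.0 * both_correct / len(graded)


# Illustrative grades, not real study data.
session = [(True, True), (True, False), (False, False), (True, True), (True, True)]
print(boundary_clarity(session))  # 60.0
```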

2. Judgment Remains Exercised

Humans are still required to think, decide, and take responsibility at the moments that matter.

Judgment isn’t clicking “approve.” Judgment is forming intent under uncertainty. Weighing trade-offs. Deciding when not to act. Owning outcomes when stakes are real.

You don’t measure judgment directly. You measure whether the system still requires it.

The Judgment Remains Exercised (JRE) Index

I use a composite: JRE = IFR × MDR × IGS × ARS

If any component trends toward zero, judgment is being outsourced.
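The composite can be sketched directly. This version assumes each component has been normalized to a rate between 0 and 1. The `warn_below` cutoff of 0.2 is an illustrative assumption for spotting which component is trending toward zero. It is not part of the index itself.

```python
import math


def jre_index(components: dict[str, float],
              warn_below: float = 0.2) -> tuple[float, list[str]]:
    """Return JRE = IFR x MDR x IGS x ARS plus the components that look close to zero."""
    required = ("IFR", "MDR", "IGS", "ARS")
    missing = [k for k in required if k not in components]
    if missing:
        raise KeyError(f"missing components: {missing}")
    score = math.prod(components[k] for k in required)
    # Hypothetical early-warning list: components below the illustrative cutoff.
    weak = [k for k in required if components[k] < warn_below]
    return score, weak


# Illustrative inputs, not measured values.
score, weak = jre_index({"IFR": 0.7, "MDR": 0.05, "IGS": 0.9, "ARS": 0.8})
print(round(score, 4), weak)  # 0.0252 ['MDR']
```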

Intent Formation Rate (IFR)

How often must humans explicitly define goals, constraints, and success criteria before AI acts?

Low IFR means the AI acts on inferred intent, and humans stop thinking early. Without proper time to form intent, judgment becomes optional, and humans become lazy.
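The sketch below computes Intent Formation Rate. It assumes each AI-assisted task is logged with three hypothetical flags that record whether the user stated a goal, constraints, and success criteria before the AI acted. Requiring all three is a strict reading of the definition. A team could reasonably weight them instead.

```python
from dataclasses import dataclass


@dataclass
class TaskStart:
    goal_stated: bool               # hypothetical flags captured before the AI acts
    constraints_stated: bool
    success_criteria_stated: bool


def intent_formation_rate(tasks: list[TaskStart]) -> float:
    """Fraction of tasks where the human defined intent explicitly before the AI acted."""
    if not tasks:
        return 0.0
    explicit = sum(
        t.goal_stated and t.constraints_stated and t.success_criteria_stated
        for t in tasks
    )
    return explicit / len(tasks)


# Illustrative task log, not real data.
tasks = [
    TaskStart(True, True, True),
    TaskStart(True, False, True),
    TaskStart(False, False, False),
    TaskStart(True, True, True),
]
print(intent_formation_rate(tasks))  # 0.5
```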

Meaningful Divergence Rate (MDR)

How often do humans intentionally deviate from AI recommendations, by modifying, partially accepting, or rejecting suggestions with a reason?

A healthy MDR doesn’t mean your AI is bad. It means humans are engaged. Judgment exists where disagreement is normal and safe.
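The next sketch computes Meaningful Divergence Rate from a recommendation log. It assumes each outcome is recorded with one of four hypothetical labels: `accepted`, `modified`, `partial`, or `rejected`. Following the definition above, a rejection counts as meaningful only when the user gave a reason.

```python
from typing import Optional


def meaningful_divergence_rate(outcomes: list[tuple[str, Optional[str]]]) -> float:
    """Share of recommendations the human deliberately changed or declined.

    Each outcome is (label, reason). Labels: accepted, modified, partial, rejected.
    """
    if not outcomes:
        return 0.0
    meaningful = 0
    for label, reason in outcomes:
        if label in ("modified", "partial"):
            meaningful += 1
        elif label == "rejected" and reason:  # a rejection counts only with a stated reason
            meaningful += 1
    return meaningful / len(outcomes)


# Illustrative log, not real data.
log = [
    ("accepted", None),
    ("accepted", None),
    ("modified", None),
    ("rejected", "pricing assumption is outdated"),
    ("rejected", None),
]
print(meaningful_divergence_rate(log))  # 0.4
```

The sketch does not define what a healthy MDR is. That target depends on the domain.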

Irreversibility Gating Score (IGS)

Do high-stakes, hard-to-undo actions require human ownership?

Identify the irreversible decisions involving money, access, compliance, or reputation. What percentage require explicit human confirmation and a review of the assumptions behind them?

AI should act freely where mistakes are cheap—and stop where they aren’t.
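The sketch below computes the Irreversibility Gating Score. It assumes you maintain an inventory of decision types tagged by category. The high-stakes categories come from the paragraph above. The `requires_confirmation` and `shows_assumptions` fields are hypothetical, and a decision counts as gated only when both are true.

```python
from dataclasses import dataclass

HIGH_STAKES = {"money", "access", "compliance", "reputation"}


@dataclass
class DecisionType:
    name: str
    category: str
    irreversible: bool
    requires_confirmation: bool   # hypothetical: explicit human sign-off before execution
    shows_assumptions: bool       # hypothetical: sign-off step surfaces the AI's assumptions


def irreversibility_gating_score(decisions: list[DecisionType]) -> float:
    """Fraction of irreversible, high-stakes decision types that require human ownership."""
    in_scope = [d for d in decisions if d.irreversible and d.category in HIGH_STAKES]
    if not in_scope:
        return 1.0  # assumption: nothing in scope means nothing is left ungated
    gated = sum(d.requires_confirmation and d.shows_assumptions for d in in_scope)
    return gated / len(in_scope)


# Illustrative inventory, not real data.
inventory = [
    DecisionType("refund_over_limit", "money", True, True, True),
    DecisionType("revoke_admin_access", "access", True, True, False),
    DecisionType("publish_press_reply", "reputation", True, False, False),
    DecisionType("suggest_tag", "content", False, False, False),
]
print(round(irreversibility_gating_score(inventory), 2))  # 0.33
```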

Accountability Recall Score (ARS)

Can humans explain decisions after the fact?

Ask users: “Why was this decision made?” “What alternatives existed?” Review logs and audits for visible rationale and human-attributed accountability.

If the answer is “the system decided,” judgment has already been outsourced.
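The sketch below covers only the log-review half of the Accountability Recall Score. The interview questions stay qualitative. It assumes decision records carry hypothetical `rationale` and `owner` fields. A record passes only if it has a non-empty rationale and a named human owner rather than a system account. The `system:` prefix for automated actors is also an assumption.

```python
def _is_attributable(record: dict) -> bool:
    """True if the record has a visible rationale and a human (non-system) owner."""
    rationale = (record.get("rationale") or "").strip()
    owner = (record.get("owner") or "").strip()
    # Assumption: automated actors are logged with a "system:" prefix.
    return bool(rationale) and bool(owner) and not owner.startswith("system:")


def accountability_recall(records: list[dict]) -> float:
    """Fraction of logged decisions with a visible rationale and a human owner."""
    if not records:
        return 0.0
    return sum(_is_attributable(r) for r in records) / len(records)


# Illustrative audit log, not real data.
audit_log = [
    {"id": 1, "rationale": "Vendor B cheaper after renegotiation", "owner": "j.alvarez"},
    {"id": 2, "rationale": "", "owner": "m.chen"},
    {"id": 3, "rationale": "Auto-selected highest score", "owner": "system:ranker"},
    {"id": 4, "rationale": "Delayed launch pending legal review", "owner": "p.okafor"},
]
print(accountability_recall(audit_log))  # 0.5
```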

3. Cognitive Clarity ↑

Complexity is absorbed by systems, not pushed back onto people.

AI often claims to reduce cognitive load while quietly increasing it through constant prompts, micro-decisions, and notifications.

True cognitive relief doesn’t mean fewer tasks. It means fewer decisions, interruptions, and context switches.

How to measure cognitive load reduction

Decision Burden Delta (DBΔ)

What’s the change in the number of decisions required to complete a workflow?

If AI introduces dozens of “review this” moments, you haven’t reduced the load. You’ve fragmented it.
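Here is a minimal sketch of Decision Burden Delta. It assumes you can list the decision points a user faces in the same workflow before and after the AI feature, for example from a task analysis. The step lists below are illustrative, not measured data.

```python
def decision_burden_delta(before: list[str], after: list[str]) -> int:
    """Change in decision points. Negative means the AI removed decisions."""
    return len(after) - len(before)


# Illustrative workflow: the AI drafts the content but adds review steps.
before = ["choose template", "write summary", "pick recipients"]
after = [
    "review suggested template",
    "review summary paragraph 1",
    "review summary paragraph 2",
    "review summary paragraph 3",
    "confirm recipients",
]
print(decision_burden_delta(before, after))  # 2
```

A positive delta means the workflow now asks for more decisions than before, even though the AI did the drafting.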

Interruption Rate (IR)

How many prompts, alerts, or nudges per workflow or per day?

Helpful once. Corrosive at scale.

Context Switch Cost (CSC)

How many tools, surfaces, or views are required to finish a task?

If users must bounce across systems to “collaborate” with AI, humans become the integration layer, and that’s a design failure.
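Interruption Rate and Context Switch Cost can both be sketched from a single interaction event log. This sketch assumes hypothetical event records with `workflow_id`, `kind`, and `surface` fields. Which event kinds count as interruptions is also an assumption that you would adapt to your product.

```python
from collections import defaultdict

INTERRUPTION_KINDS = {"prompt", "alert", "nudge"}  # assumption: adapt to your product


def interruption_and_switches(events: list[dict]) -> dict[str, dict[str, int]]:
    """Per workflow: number of interruptions (IR) and distinct surfaces used (CSC)."""
    interruptions: dict[str, int] = defaultdict(int)
    surfaces: dict[str, set[str]] = defaultdict(set)
    for e in events:
        wf = e["workflow_id"]
        surfaces[wf].add(e["surface"])
        if e["kind"] in INTERRUPTION_KINDS:
            interruptions[wf] += 1
    return {
        wf: {"interruptions": interruptions[wf], "surfaces": len(surfaces[wf])}
        for wf in surfaces
    }


# Illustrative events, not real telemetry.
events = [
    {"workflow_id": "w1", "kind": "action", "surface": "crm"},
    {"workflow_id": "w1", "kind": "prompt", "surface": "ai_sidebar"},
    {"workflow_id": "w1", "kind": "action", "surface": "email"},
    {"workflow_id": "w1", "kind": "alert", "surface": "ai_sidebar"},
    {"workflow_id": "w2", "kind": "action", "surface": "crm"},
]
print(interruption_and_switches(events))
# {'w1': {'interruptions': 2, 'surfaces': 3}, 'w2': {'interruptions': 0, 'surfaces': 1}}
```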

Why This Formula Matters

The AI curve is accelerating faster than human readiness, a topic I’m exploring in the upcoming book The AI Leader Mindset.

Without explicit constraints, product teams will optimize for speed, automation, and engagement, and unintentionally train passivity, over-reliance, and loss of judgment under pressure.

These effects don’t show up in quarterly metrics. They show up years later in leadership failures, brittle organizations, and humans who no longer trust their own thinking.

The Design Responsibility We’re Avoiding

Every AI product trains behaviors.

Are you training discernment or compliance?

AI that preserves agency, judgment, and human capacity may feel slower to adopt, less magical in demos, harder to sell internally. But it compounds trust, resilience, and leadership over time.

What This Means for You

We don’t need less AI. We need AI shipped with human-preservation constraints, where agency is protected, judgment is exercised, and complexity is absorbed by systems, not people.

If we don’t measure these things explicitly, erosion becomes the default.

At this speed, defaults in AI systems can reshape, or erode, society before we notice.